Pre-Onboarding for GCP Billing Accounts

Introduction

This user guide explains the pre-onboarding steps required to onboard a GCP Cloud Billing Account into the platform.

Pre-onboarding

GCP Projects can be onboarded as a Billing Account in the platform. Onboarding a Billing Account allows you to discover the cost information of all its linked GCP Projects.

Before your GCP Project can be onboarded into the platform, the prerequisites below must be met.

Set Up Cloud Billing Data Export to BigQuery:

To allow your Cloud Billing usage costs and/or pricing data to be exported to BigQuery, do the following:

- In the Google Cloud console, go to the Billing export page.

- Select the Cloud Billing account for which you would like to export the billing data. The Billing export page opens for the selected billing account.

- On the BigQuery export tab, click Edit settings for each type of data you'd like to export. Each type of data is configured separately.

- From the Projects list, select the project that you set up to contain your BigQuery dataset. The project you select is used to store the exported Cloud Billing data in the BigQuery dataset.

  - For standard and detailed usage cost data exports, the Cloud Billing data includes usage/cost data for all Cloud projects paid for by the same Cloud Billing account.

  - For pricing data export, the Cloud Billing data includes only the pricing data specific to the Cloud Billing account that is linked to the selected dataset project.

- From the Dataset ID field, select the dataset that you set up to contain your exported Cloud Billing data. For all types of Cloud Billing data exported to BigQuery, the following applies:

  - The BigQuery API is required to export data to BigQuery. If the project you selected doesn't have the BigQuery API enabled, you are prompted to enable it. Click Enable BigQuery API to enable the API.

  - If the project you selected doesn't contain any BigQuery datasets, you are prompted to create one. If necessary, create a new dataset before continuing.

  - If you use an existing dataset, review the limitations that might impact exporting your billing data to BigQuery, such as being unable to export data to datasets configured to use customer-managed key encryption.

  For pricing data export, the BigQuery Data Transfer Service API is also required to export the data to BigQuery. If the project you selected doesn't have the BigQuery Data Transfer Service API enabled, you are prompted to enable it. If necessary, enable the API before continuing.

- Click Save.

GCP Project Permissions

The following permissions must be configured in your GCP Project before onboarding.

User Account Permissions:

- A user account must be created in GCP with the following permissions:

- Project Editor (View and Modify.)

- Billing Account Admin (This is required for exporting the billing data to a BigQuery dataset. If the data is already exported, then this permission is not required.)

- BigQuery Admin (If the BigQuery dataset exported is in a different project, you need this access to retrieve the BigQuery dataset name. )

- In GCP, enable API Access for the Cloud Resource Manager API, Recommender API, Cloud Billing API, and Compute Engine API in the API & Services – Library screen.

- When it comes to onboarding Google Cloud Platform (GCP) billing accounts into CoreStack, one method that might be best for certain situations is to use the GCP cloud shell interface. Execute the below command to download the shell script in GCP CLI and run it to help in onboarding the GCP Billing Account.

- wget https://storage.googleapis.com/gcp-onboarding-script/Corestack-Onboarding/GCP-Billing%20Account.sh

- A service account must be created in GCP with the following permissions:

- Project Viewer (Read only).

- Schedule queries must be created in the GCP BigQuery console.

- Create a Bucket for a BigQuery data transfer (under the same GCP Project where BigQuery exists).

- Login to the GCP console.

- Navigate to the Storage - Browser screen.

- Click Create bucket. The Create bucket screen appears.

- Provide a unique value in the Name your bucket field along with the other details required to create the bucket.

- Click the Create button.

- Copy the value provided in the Name your bucket field.

- Login to the GCP console.

- Navigate to the GCP BigQuery console.

- On the left menu, click Schedule Queries. The schedule queries list appears.

- Click Create Schedule Queries located at the top of the page.

- Copy each schedule query (Daily Schedule Query, Monthly Schedule Query, and On-demand Schedule Query).

- For the Daily Schedule Query, schedule it on an hourly basis.

- For the Monthly Schedule Query, schedule it on the fifth of every month.

- For On-demand Schedule Query, run it in real time.

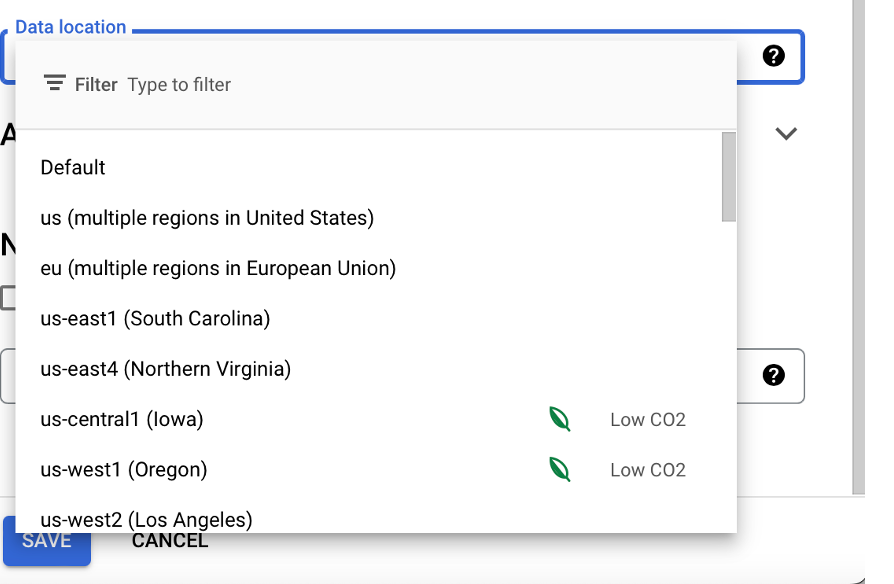

- Select the Data location for the query to be executed.

- Navigate to the Credentials screen.

- Click Create credentials and select Service account. The Create service account page appears.

- Provide the necessary details to create a service account: Name, ID, and Description.

- Click the Create button.

- Click Select a role to select the required roles.

- Click the Continue button.

- Click Create key.

- Select JSON as the Key type.

- Click the Create button. A JSON key file will be downloaded.

- Click Done.

- Login to the GCP console.

- Navigate to the Credentials screen.

- Click Create credentials and select OAuth client ID.

- Select Web application in the Application type field.

- Specify the following URI in the Authorized redirect URIs by clicking the Add URI button:

- Click the Create button. The Client ID and Client secret values will be displayed.

- Navigate to the Projects screen in the GCP console.

- The Project ID will be displayed next to your GCP project in the project list.

- Construct a URL in the following format:

- Open a browser window in private mode (e.g. InPrivate, Incognito) and use it to access the above URL.

- Login using your GCP credentials.

- The page will be redirected to the Redirect URI, but the address bar will have the Authorization Code specified after

code=.

API Access:

Automated Approach for GCP Billing Account Onboarding:

Service Account Permissions:

Billing Account Prerequisites:

Note:

The Bucket, Dataset and Scheduled Query created should be in the same location for the schedule query to execute successfully.

Bucket Name:

Schedule Queries in GCP

Next, you need to schedule some queries in GCP. Navigate to the Schedule Query Page in the GCP console then follow the steps below:

Note:

The Data location selected should be the same as what was selected for Bucket and the Dataset.

Note:

For the code snippets below, you need to insert your table id and bucket name.

Daily Schedule Query

```sql
-- Replace the placeholder with the name of the bucket created for the data transfer.
DECLARE bucket_name STRING DEFAULT '<your bucket name>';
DECLARE current_date DATE DEFAULT CURRENT_DATE;

-- Daily: select usage for the current month (month boundaries in US/Pacific).
DECLARE start_date TIMESTAMP DEFAULT TIMESTAMP(DATE_TRUNC(current_date, MONTH), 'US/Pacific');
DECLARE end_date TIMESTAMP DEFAULT TIMESTAMP(DATE_TRUNC(DATE_ADD(current_date, INTERVAL 1 MONTH), MONTH), 'US/Pacific');
DECLARE bucket_path STRING DEFAULT CONCAT('gs://', bucket_name, '/', FORMAT_DATE('%G-%m', current_date), '/', FORMAT_DATE('%F', current_date), '/*.csv');

-- Replace <complete table id> with the full ID of the billing export table.
EXPORT DATA OPTIONS (uri=(bucket_path), format='JSON', overwrite=True) AS
SELECT
  *, (SELECT STRING_AGG(display_name, '/') FROM B.project.ancestors) organization_list
FROM `<complete table id>` as B
WHERE usage_start_time >= start_date AND usage_start_time < end_date AND _PARTITIONTIME >= TIMESTAMP(EXTRACT(DATE FROM start_date));
```
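To make the export location easier to predict, the following is a minimal Python sketch of what the DECLARE statements in the Daily Schedule Query compute. The function names and the sample bucket name are hypothetical and are not part of the platform or of BigQuery. The sketch assumes that `%G` is the ISO 8601 year and `%F` is the `YYYY-MM-DD` date, and it ignores the US/Pacific time zone handling that the query applies to the month boundaries.

```python
from datetime import date


def month_window(today: date) -> tuple[date, date]:
    """Return (first day of this month, first day of next month)."""
    start = today.replace(day=1)
    if start.month == 12:
        end = date(start.year + 1, 1, 1)
    else:
        end = date(start.year, start.month + 1, 1)
    return start, end


def daily_export_uri(bucket_name: str, today: date) -> str:
    """Mirror the CONCAT(...) expression that builds bucket_path."""
    iso_year = today.isocalendar()[0]  # assumed equivalent of BigQuery's %G
    month_folder = f"{iso_year:04d}-{today.month:02d}"
    day_folder = today.isoformat()  # assumed equivalent of %F (YYYY-MM-DD)
    return f"gs://{bucket_name}/{month_folder}/{day_folder}/*.csv"


if __name__ == "__main__":
    # "my-billing-bucket" is a placeholder, not a real bucket.
    print(month_window(date(2024, 5, 17)))
    print(daily_export_uri("my-billing-bucket", date(2024, 5, 17)))
```

Under these assumptions, each run writes to a folder named after the run date inside a folder named after the month, so a later run on the same day overwrites the earlier export for that day.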

Monthly Schedule Query

```sql
-- Replace the placeholder with the name of the bucket created for the data transfer.
DECLARE bucket_name STRING DEFAULT '<your bucket name>';
DECLARE current_date DATE DEFAULT CURRENT_DATE;

-- Backfill: select usage for the month before the current month (US/Pacific).
DECLARE start_date TIMESTAMP DEFAULT TIMESTAMP(DATE_TRUNC(DATE_SUB(current_date, INTERVAL 1 MONTH), MONTH), 'US/Pacific');
DECLARE end_date TIMESTAMP DEFAULT TIMESTAMP(DATE_TRUNC(DATE_ADD(EXTRACT(DATE FROM start_date), INTERVAL 1 MONTH), MONTH), 'US/Pacific');
DECLARE bucket_path STRING DEFAULT CONCAT('gs://', bucket_name, '/', FORMAT_TIMESTAMP('%G-%m', start_date), '_backfill/*.csv');

-- Replace <complete table id> with the full ID of the billing export table.
EXPORT DATA OPTIONS (uri=(bucket_path), format='JSON', overwrite=True) AS
SELECT
  *, (SELECT STRING_AGG(display_name, '/') FROM B.project.ancestors) organization_list
FROM `<complete table id>` as B
WHERE usage_start_time >= start_date AND usage_start_time < end_date AND _PARTITIONTIME >= TIMESTAMP(EXTRACT(DATE FROM start_date));
```

On-Demand Scheduled Query

```sql
-- Replace the placeholder with the name of the bucket created for the data transfer.
DECLARE bucket_name STRING DEFAULT '<your bucket name>';
DECLARE current_date DATE DEFAULT CURRENT_DATE;

-- Backfill: select usage for the month that is 1 month before the current month.
DECLARE start_date TIMESTAMP DEFAULT TIMESTAMP(DATE_TRUNC(DATE_SUB(current_date, INTERVAL 1 MONTH), MONTH), 'US/Pacific');
DECLARE end_date TIMESTAMP DEFAULT TIMESTAMP(DATE_TRUNC(DATE_ADD(EXTRACT(DATE FROM start_date), INTERVAL 1 MONTH), MONTH), 'US/Pacific');
DECLARE bucket_path STRING DEFAULT CONCAT('gs://', bucket_name, '/', FORMAT_TIMESTAMP('%G-%m', start_date), '_backfill/*.csv');

EXPORT DATA OPTIONS (uri=(bucket_path), format='JSON', overwrite=True) AS
SELECT
  *, (SELECT STRING_AGG(display_name, '/') FROM B.project.ancestors) organization_list
FROM `<complete table id>` as B
WHERE usage_start_time >= start_date AND usage_start_time < end_date AND _PARTITIONTIME >= TIMESTAMP(EXTRACT(DATE FROM start_date));
```

```sql
DECLARE bucket_name STRING DEFAULT '<your bucket name>';
DECLARE current_date DATE DEFAULT CURRENT_DATE;

-- Backfill: select usage for the month that is 2 months before the current month.
DECLARE start_date TIMESTAMP DEFAULT TIMESTAMP(DATE_TRUNC(DATE_SUB(current_date, INTERVAL 2 MONTH), MONTH), 'US/Pacific');
DECLARE end_date TIMESTAMP DEFAULT TIMESTAMP(DATE_TRUNC(DATE_ADD(EXTRACT(DATE FROM start_date), INTERVAL 1 MONTH), MONTH), 'US/Pacific');
DECLARE bucket_path STRING DEFAULT CONCAT('gs://', bucket_name, '/', FORMAT_TIMESTAMP('%G-%m', start_date), '_backfill/*.csv');

EXPORT DATA OPTIONS (uri=(bucket_path), format='JSON', overwrite=True) AS
SELECT
  *, (SELECT STRING_AGG(display_name, '/') FROM B.project.ancestors) organization_list
FROM `<complete table id>` as B
WHERE usage_start_time >= start_date AND usage_start_time < end_date AND _PARTITIONTIME >= TIMESTAMP(EXTRACT(DATE FROM start_date));
```

```sql
DECLARE bucket_name STRING DEFAULT '<your bucket name>';
DECLARE current_date DATE DEFAULT CURRENT_DATE;

-- Backfill: select usage for the month that is 3 months before the current month.
DECLARE start_date TIMESTAMP DEFAULT TIMESTAMP(DATE_TRUNC(DATE_SUB(current_date, INTERVAL 3 MONTH), MONTH), 'US/Pacific');
DECLARE end_date TIMESTAMP DEFAULT TIMESTAMP(DATE_TRUNC(DATE_ADD(EXTRACT(DATE FROM start_date), INTERVAL 1 MONTH), MONTH), 'US/Pacific');
DECLARE bucket_path STRING DEFAULT CONCAT('gs://', bucket_name, '/', FORMAT_TIMESTAMP('%G-%m', start_date), '_backfill/*.csv');

EXPORT DATA OPTIONS (uri=(bucket_path), format='JSON', overwrite=True) AS
SELECT
  *, (SELECT STRING_AGG(display_name, '/') FROM B.project.ancestors) organization_list
FROM `<complete table id>` as B
WHERE usage_start_time >= start_date AND usage_start_time < end_date AND _PARTITIONTIME >= TIMESTAMP(EXTRACT(DATE FROM start_date));
```
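The three on-demand queries differ only in how many months back they start (1, 2, or 3). The Python sketch below is one way to reason about which month each query covers and which folder it writes to. The function names are hypothetical, `%G` is assumed to be the ISO 8601 year, and the US/Pacific time zone handling of the queries is not reproduced.

```python
from datetime import date


def months_back_start(today: date, months_back: int) -> date:
    """First day of the month that lies `months_back` months before today."""
    index = today.year * 12 + (today.month - 1) - months_back
    return date(index // 12, index % 12 + 1, 1)


def backfill_export_uri(bucket_name: str, today: date, months_back: int) -> str:
    """Mirror the CONCAT(...) expression that builds the backfill bucket_path."""
    start = months_back_start(today, months_back)
    iso_year = start.isocalendar()[0]  # assumed equivalent of BigQuery's %G
    return f"gs://{bucket_name}/{iso_year:04d}-{start.month:02d}_backfill/*.csv"


if __name__ == "__main__":
    # "my-billing-bucket" is a placeholder, not a real bucket.
    for months_back in (1, 2, 3):
        print(backfill_export_uri("my-billing-bucket", date(2024, 5, 17), months_back))
```

Because the ISO 8601 year can differ from the calendar year when a month starts near a year boundary, the folder name may not always match the calendar month being exported. For example, 1 January 2023 is a Sunday that belongs to ISO year 2022, so this sketch yields a `2022-01_backfill` folder for a run in February 2023. This guide does not state which folder name the platform expects, so it is worth verifying that case in your own environment.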

Retrieving Onboarding Information from the GCP Console

Based on the authentication protocol being used in CoreStack (refer to the options below for guidance), certain information must be retrieved from the GCP console.

Service Account protocol:

A service account must be created in your GCP Project. Then, you need to create a service account key and download it as a JSON file. Also, the Project ID must be retrieved from your GCP Project.

How to Download the Credentials File (JSON):

Project ID:

Refer to the steps in the Project ID topic of the OAuth2 Based section above.

Provide the JSON and Project ID while onboarding the GCP Project in CoreStack when using the Service Account option.
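Before uploading the key, it can help to confirm that the downloaded file really is a service account key and to read the Project ID from it. The following Python sketch is one possible check, not part of the platform. The function name is hypothetical, and the field names (`type`, `project_id`, `private_key`, `client_email`) are the ones normally present in a GCP service account key file.

```python
import json

# Fields normally present in a GCP service account key file.
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def read_project_id(key_json: str) -> str:
    """Validate the text of a service account key and return its project ID."""
    key = json.loads(key_json)
    missing = [field for field in REQUIRED_FIELDS if not key.get(field)]
    if missing:
        raise ValueError(f"key file is missing fields: {', '.join(missing)}")
    if key["type"] != "service_account":
        raise ValueError(f"unexpected credential type: {key['type']}")
    return key["project_id"]


if __name__ == "__main__":
    # Placeholder values only; a real key file is downloaded from the GCP console.
    sample = json.dumps({
        "type": "service_account",
        "project_id": "example-project",
        "private_key": "-----BEGIN PRIVATE KEY-----\n...",
        "client_email": "reader@example-project.iam.gserviceaccount.com",
    })
    print(read_project_id(sample))
```

To check a downloaded file, read its contents into a string and pass that string to `read_project_id`.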

OAuth2 protocol:

The following values must be generated/copied from your GCP Project and configured in CoreStack.

Client ID & Client Secret:

https://corestack.io/

Scope:

Use the following OAuth 2.0 scope for a GCP project: https://www.googleapis.com/auth/cloud-platform

Project ID:

The project ID is a unique identifier for a project and is used only within the GCP console.

Redirect URI:

The following redirect URI that is configured while creating the Client ID and Client Secret must be used:

https://corestack.io/

Authorization Code:

The authorization code must be generated with user consent and required permissions.

https://accounts.google.com/o/oauth2/auth?response_type=code&client_id=<Client ID>&redirect_uri=<Redirect URI>&scope=https://www.googleapis.com/auth/cloud-platform&prompt=consent&access_type=offline

Note:

Replace <Client ID> and <Redirect URI> in the URL format with the values retrieved in the earlier steps.

Copy these details and provide them while onboarding your GCP Project into CoreStack when using the OAuth2 option.
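As an illustration of the Authorization Code steps, the Python sketch below assembles the URL in the format shown above and pulls the code out of the address copied from the browser after the redirect. The function names and sample values are hypothetical. The endpoint, scope, and query parameters are taken from the URL format in this guide.

```python
from urllib.parse import parse_qs, urlencode, urlparse

AUTH_ENDPOINT = "https://accounts.google.com/o/oauth2/auth"
SCOPE = "https://www.googleapis.com/auth/cloud-platform"


def build_authorization_url(client_id: str, redirect_uri: str) -> str:
    """Fill <Client ID> and <Redirect URI> into the URL format from this guide."""
    params = {
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": SCOPE,
        "prompt": "consent",
        "access_type": "offline",
    }
    # urlencode percent-encodes the values so they are safe in a query string.
    return f"{AUTH_ENDPOINT}?{urlencode(params)}"


def extract_authorization_code(redirected_url: str) -> str:
    """Return the value after code= in the address bar of the redirected page."""
    codes = parse_qs(urlparse(redirected_url).query).get("code")
    if not codes:
        raise ValueError("no code= parameter found in the redirected URL")
    return codes[0]


if __name__ == "__main__":
    # The client ID below is a placeholder, not a real credential.
    print(build_authorization_url("example-client-id", "https://corestack.io/"))
    print(extract_authorization_code("https://corestack.io/?code=4%2F0AbCd&scope=x"))
```

The second function also decodes percent-encoded characters, so the value it returns may look slightly different from the raw text in the address bar.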

GCP Billing Impact Due to Onboarding

Refer to the GCP pricing details explained in the table below.

| Area | Module | Resources Created Through the Platform | Pricing | References |
|---|---|---|---|---|
| CloudOps | Activity Configuration | Cloud Pub/Sub Topic; Subscription; Sink Router | Throughput cost: Free up to 10 GB for a billing account and $40 per TB beyond that. Storage cost: $0.27 per GB per month. Egress cost: This depends on the egress internet traffic, and costs are consistent with VPC network rates. For details, refer to: https://cloud.google.com/vpc/network-pricing#vpc-pricing | https://cloud.google.com/pubsub/pricing#pubsub |
| CloudOps | Alerts Configuration | Notification Channel; Alert Policies | Alert Policies: Free of charge. Monitoring: $0.2580/MB: first 150–100,000 MB. $0.1510/MB: next 100,000–250,000 MB. $0.0610/MB: >250,000 MB. | https://cloud.google.com/stackdriver/pricing#monitoring-pricing-summary |
| SecOps | Vulnerability | Need to enable GCP Security Command Center (Standard Tier) | GCP Security Command Center (Standard Tier): Free of charge. | https://cloud.google.com/security-command-center/pricing#tier-pricing |
| SecOps | Threat Management (Optional) | Need to enable GCP Security Command Center (Premium) | GCP Security Command Center (Premium): If annual cloud spend or commit is less than $15 million, then it's 5% of committed annual cloud spend or actual annual current annualized cloud spend (whichever is higher). | https://cloud.google.com/security-command-center/pricing#tier-pricing |
| FinOps | BigQuery Billing Export | Need to enable standard export and create a Dataset | Storage cost for dataset: $0.02 per GB (First 10 GB is free per month). | https://cloud.google.com/bigquery/pricing#storage |
| FinOps | Scheduled Queries | Daily Scheduled Query; Monthly Scheduled Query; On-demand Scheduled Query | $5 per TB (First 1 TB is free per month). | https://cloud.google.com/bigquery/pricing#on_demand_pricing |
| FinOps | Transferred Billing Data | Storage Bucket | $0.02–$0.023 per GB per month. | https://cloud.google.com/storage/pricing#price-tables |

Note:

These charges are based on the GCP pricing references. Actual cost may vary based on volume and consumption.
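As a rough worked example of the FinOps rows in the table, the Python sketch below applies the listed rates to hypothetical monthly volumes. The function name and the input figures are illustrative assumptions. The rates and free tiers are copied from the table and may not match current GCP pricing.

```python
def estimate_finops_monthly_cost(dataset_gb: float, scanned_tb: float, bucket_gb: float) -> dict:
    """Estimate monthly FinOps charges in USD from the rates in the table."""
    dataset_storage = max(dataset_gb - 10, 0) * 0.02   # first 10 GB free, then $0.02 per GB
    scheduled_queries = max(scanned_tb - 1, 0) * 5.0   # first 1 TB free, then $5 per TB
    bucket_low = bucket_gb * 0.02                      # low end of the $0.02-$0.023 range
    bucket_high = bucket_gb * 0.023                    # high end of the range
    return {
        "dataset_storage": round(dataset_storage, 2),
        "scheduled_queries": round(scheduled_queries, 2),
        "bucket_storage_low": round(bucket_low, 2),
        "bucket_storage_high": round(bucket_high, 2),
        "total_low": round(dataset_storage + scheduled_queries + bucket_low, 2),
        "total_high": round(dataset_storage + scheduled_queries + bucket_high, 2),
    }


if __name__ == "__main__":
    # Hypothetical volumes: 50 GB dataset, 1.5 TB scanned, 100 GB in the bucket.
    print(estimate_finops_monthly_cost(dataset_gb=50, scanned_tb=1.5, bucket_gb=100))
```

With these sample volumes, the dataset storage comes to 40 GB × $0.02 = $0.80, the scheduled queries to 0.5 TB × $5 = $2.50, and the bucket to between $2.00 and $2.30, for a total of roughly $5.30 to $5.60 per month.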
